NVIDIA Jetson Project of the Month: Recognizing Birds by Sound

It is one thing to identify a bird in the wild based on how it appears. It is quite another to identify that same bird based solely on how it sounds. Unless you are a skilled birder having a Big Year, identifying birds by sound is likely to be quite challenging.

A group of mathematics, computer science, and biology researchers from the University of Marburg in Germany devised a way to quickly identify birds and monitor local biodiversity. They used audio recordings captured with portable devices connected to the NVIDIA Jetson Nano Developer Kit.

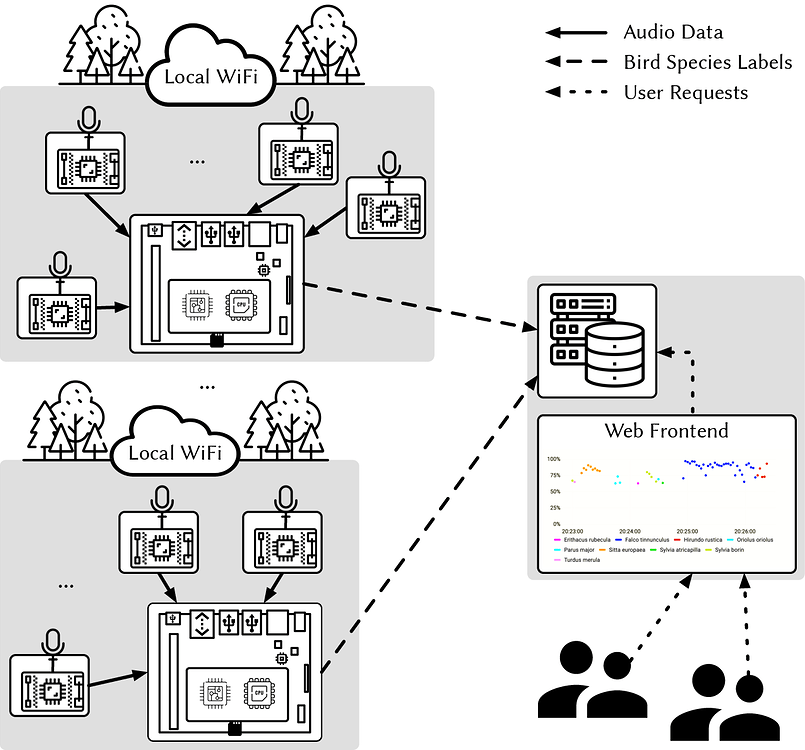

According to the researchers, the Bird@Edge project is an edge AI system that is “based on embedded edge devices operating in a distributed system to enable efficient, continuous evaluation of soundscapes recorded in forests.” To learn more, see Bird@Edge: Bird Species Recognition at the Edge.

The Bird@Edge project

Using multiple ESP32-based microphones (Bird@Edge mics), the researchers streamed bird sounds to a local Bird@Edge station for species recognition. Each microphone had a Wi-Fi radius of 50 meters.

This setup was deployed in a local forest, so the researchers carefully considered Wi-Fi transmission of the audio streams, as well as GPU performance. The team determined that they could connect up to 10 microphones to a single station. The results obtained from the stations were then transmitted to a backend cloud for further analysis by biodiversity researchers on the team.

“We were able to still have some processing capacity on the Jetson Nano GPU running our model,” researcher Jonas Höchst said. “We were also able to use a custom low-power profile for the Jetson Nano, which indicates the GPU itself still has some processing capacity.”

With the Bird@Edge project, bird species can be quickly identified by sound alone. “The delay between the bird song being recorded by a microphone and its visualization is on the order of seconds, compared to days with traditional approaches,” according to the researchers.

Figure 1. The Bird@Edge system captures bird sounds in the forest and transmits the information to an edge server for analysis

Bird@Edge system software and AI

To recognize bird species from the sound recordings, the team developed a deep neural network (DNN) based on the EfficientNet-B3 architecture and trained it using TensorFlow. The model was optimized using NVIDIA TensorRT and deployed with the NVIDIA DeepStream SDK.

This approach enabled the team to build the AI-powered app in a way that supports multiple live streams on the Jetson Nano GPU. The algorithm was trained to recognize any of the 82 bird species found in the university-owned forest in Marburg, but could be extended to further species if required.

The Jetson Nano runs a Bird@Edge daemon that discovers the microphones, then instantiates and runs the AI processing pipeline. The results are sent over a cellular network to the Bird@Edge server and stored in an InfluxDB database. The database is connected to Grafana, so the researchers can visualize the data in a web interface.

The team demonstrated that their DNN “outperforms the state-of-the-art BirdNET neural network on several datasets and achieves a recognition quality of up to 95.2% mean average precision on soundscape recordings.” See Bird@Edge: Bird Species Recognition at the Edge for more details.
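For readers unfamiliar with the metric, the sketch below shows one common way to compute mean average precision (mAP): rank the predictions for each species by score, average the precision at every rank where a true positive occurs, then average those per-species values. This is a generic illustration with made-up scores and labels. The exact evaluation protocol used by the researchers is described in their paper and may differ.

```python
def average_precision(scores, labels):
    """Average precision for one class.

    scores: model confidence per audio segment.
    labels: 1 if the species is actually present in that segment, else 0.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precisions = []
    for rank, (_, is_positive) in enumerate(ranked, start=1):
        if is_positive:
            hits += 1
            precisions.append(hits / rank)  # precision at this rank
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(per_class):
    """Mean of the per-class average precision values."""
    aps = [average_precision(scores, labels) for scores, labels in per_class.values()]
    return sum(aps) / len(aps)


# Hypothetical predictions for two species over a few segments.
example = {
    "species_a": ([0.9, 0.8, 0.3], [1, 0, 1]),
    "species_b": ([0.2, 0.7], [0, 1]),
}
print(round(mean_average_precision(example), 3))
```

In this toy example, `species_a` has true positives at ranks 1 and 3, giving an average precision of (1/1 + 2/3) / 2, while `species_b` is ranked perfectly.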

The system can also identify less common bird species. “We are currently working with a voluntary ornithologist who is collecting birdsongs of uncommon and therefore less covered bird species, which further improves our approach,” Höchst said.

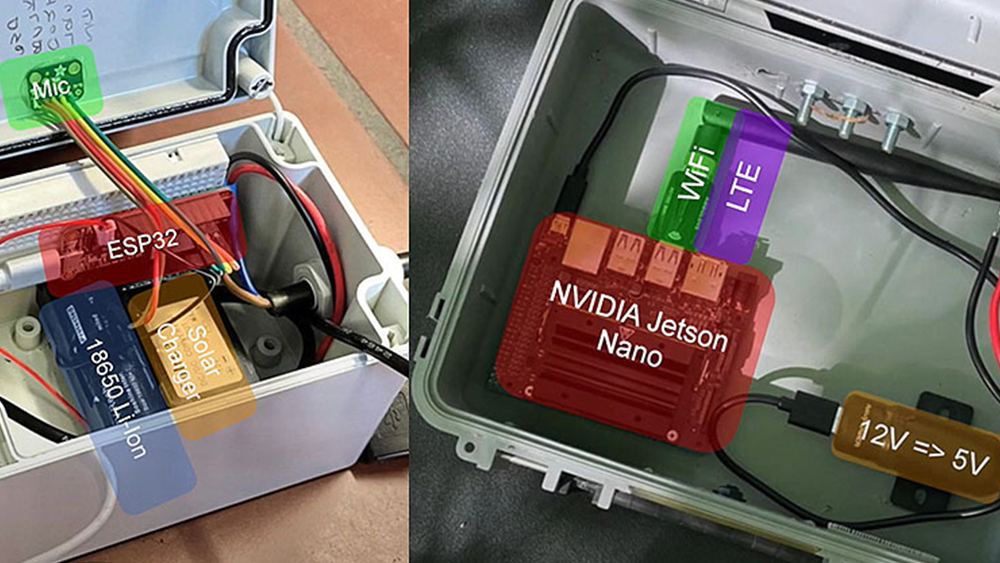

Bird@Edge system hardware

The Bird@Edge tool is based on embedded edge devices operating in a distributed system, including:

- Bird@Edge microphones: Knowles microphones paired with ESP32 SoCs that communicate through Bluetooth or Wi-Fi, powered by an affordable battery cell.

- Bird@Edge station: Captures audio recordings from incoming streams and performs inference for each microphone deployed. The station is a small portable case that includes the Jetson Nano, Wi-Fi, modem, voltage converter, and a small solar charger.

- Bird@Edge server: Receives the recognition results from the stations and stores them in an InfluxDB database, with Grafana used to render the insights visually.

Given that the Bird@Edge stations were set up in the forest, the team needed to ensure that each station could operate efficiently and without frequently recharging the battery. The hardware of the microphones and the edge stations was harmonized for energy efficiency.

The team created an efficient energy profile for the station that required just 3.16 watts and could last for nearly 2 weeks without a battery recharge. With the attached solar panel, the station can run continuously. The team also found that the station's power consumption does not change much, even as the number of connected microphones increases.
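As a rough back-of-the-envelope check, the energy implied by these figures can be worked out directly. The sketch below treats "nearly 2 weeks" as 14 days, which is an upper bound. The 12 V nominal battery voltage is an assumption made only for this example, since the article does not state the battery specification, and voltage converter losses are ignored.

```python
STATION_POWER_W = 3.16   # station power draw reported in the article
RUNTIME_DAYS = 14        # "nearly 2 weeks", taken as an upper bound
ASSUMED_VOLTAGE_V = 12   # hypothetical nominal battery voltage, not from the article


def energy_wh(power_w: float, days: float) -> float:
    """Energy in watt-hours consumed at a constant power draw over a number of days."""
    return power_w * days * 24


def capacity_ah(energy: float, nominal_voltage_v: float) -> float:
    """Battery capacity in amp-hours needed to store the given energy."""
    return energy / nominal_voltage_v


total_wh = energy_wh(STATION_POWER_W, RUNTIME_DAYS)
print(f"Energy over {RUNTIME_DAYS} days: {total_wh:.2f} Wh")
print(f"Capacity at {ASSUMED_VOLTAGE_V} V: {capacity_ah(total_wh, ASSUMED_VOLTAGE_V):.2f} Ah")
```

Under these assumptions, the station would use a little over 1 kWh in two weeks, which corresponds to roughly 88 Ah at 12 V.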

Video 1. Researchers discuss the Bird@Edge project

Tracking regional biodiversity

The research team sees this project as a means to more easily track regional biodiversity. Previously, researchers who wanted a finger on the pulse of a habitat’s health faced tedious work transcribing audio recordings by hand, and results took months to collect, analyze, and present.

Based on the work of Höchst and his teammates, environmental scientists around the world can now see in seconds which local bird species are present and gain immediate insight into the state of an ecosystem.

“Birds are important for many ecosystems, since they interconnect habitats, resources, and biological processes, and thus serve as important early warning bioindicators of an ecosystem’s health,” the researchers said.

Summary

Can we expect Bird@Edge to be available more broadly? According to Höchst, the team is thinking about ways to commercialize their system. “It is a challenge to go from a hand-assembled prototype that performs well in a controlled environment to a product that can be operated in large quantities without regular maintenance,” he said.

“However, we have already gained experience in the design and construction of hardware for the automated detection of VHF telemetry signals and are working on an easy-to-build and robust hardware design for an upcoming large-scale study.”

Additionally, since their paper was first released, the team has been developing a web service to make the use of sound recordings more accessible to a broad user base.

“On the one hand, this enables users to use existing audio recorders they already have in stock and upload their files to our web service in the cloud,” Höchst explained. “On the other hand, users receive direct feedback and can, for example, view the spectra of the uploaded files, manually verify the results, or report misclassifications in order to improve the underlying machine learning model.”

Additional resources

For more details, see Bird@Edge: Bird Species Recognition at the Edge on the NVIDIA Developer Forum. All software and hardware components used in the Bird@Edge project are open source and available through BirdEdge on GitHub.

Other projects that use AI to identify birds by sound include the Merlin Bird ID app, which is available for both iOS and Android devices. To learn more about environment-focused AI models, see DIY Urban AI: Researchers Drive Hyper-Local Climate Modeling Movement.

This article was written by Jason Black and initially published on the NVIDIA Technical Blog.